Why we need to stop asking tech CEOs about the future of AI

And why human behaviour experts urgently need to enter the conversation.

Hi all,

It’s been a while! Apologies for my extended absence. There’s been a lot happening behind the scenes, including officially handing in the manuscript for my book on intuition, which comes out later this year/early next. You might notice this newsletter has a new look.

What was previously known as Future Minds Fridays is now simply Future Minds, and I’ve also moved over to Substack for a sleeker, more editorial look. While I won’t exclusively be posting on Fridays anymore, you can continue to look forward to thought-provoking content on mental imagery, aphantasia, intuition, and technology.

This week, I’m talking about a topic I’m extremely passionate about: the intersection of AI and mental health, and why human behaviour experts need to step up and take a stance. I hope you enjoy!

There's a particular question the media loves to ask the CEOs of AI companies. It usually goes something like this: "How do you think AI will affect humanity in the future?"

If you think about it, this question is a bit like asking a fish to describe what life is like on dry land. With all due respect, how the hell would they know? They're not qualified to comment on the ‘human’ part of the equation. They're not neuroscientists. They're not psychologists. They're technologists. They might know the ins and outs of AI. But humans? Not so much.

Let's be clear: this is not to say that these tech CEOs aren't brilliant; they absolutely are. But they are brilliant at very specific things. They can talk to you about algorithms, data structures, and deep learning until they're blue in the face. But when it comes to predicting how these technologies will interact with the intricacies of the human mind and society, they're out of their depth. And their overrepresentation in these conversations is problematic for a few reasons:

They’re biased

Consider this: If you're the CEO of an AI company, you've got a vested interest in the success and proliferation of AI. This, my friends, is what we call a conflict of interest.

A CEO, when asked about the impact of AI on society, is likely to paint a rosy picture. That's not because they're devious or dishonest, but because they're biased. As with all of us, heuristics muddy their thinking. It's human nature. It's like asking a parent if their kid is the smartest in the class. Of course they'll say yes. Does this make it true? Not necessarily.

Don’t even get me started on the doomsday prophecies and the ‘AI will wipe out humanity’ narratives. Often, these extinction-level predictions are peddled by those same AI companies as PR exercises to create hype, generate more clicks and sell more products. Yes, the call is coming from inside the house.

They’re too focused on the horizon

But the irony here is so thick you could cut it with a knife. The real danger isn't some far-off AI rebellion you’d see in a sci-fi movie. It's AI in the hands of humans right here, right now.

Think about it. While we're fretting over future AI uprisings, we're turning a blind eye to the immediate threats posed by AI. We're glossing over how the misuse of AI is spreading disinformation, driving political division, manipulating consumer behaviour, and even weaponising social media.

We're so focused on preventing AI from gaining consciousness and wreaking havoc that we're missing the havoc it's already causing when wielded irresponsibly by humans. Essentially, we’re missing the trees for the forest. This is like worrying about a future flood while ignoring the fact that your house is on fire.

The mental health effects we don't see coming are the ones that will really cause damage. Just as we didn’t expect social media to lead to depression and anxiety (especially amongst young people), we don’t expect AI gadgets and apps to mess with our minds. But guess what? They are. And they're doing it quietly, subtly, behind the scenes. We’ve already seen a preview of this in action, when a Belgian man took his own life after a chatbot on an app called Chai reportedly encouraged him to.

So, what happens when AI becomes even more integrated into our lives? What happens when we begin to rely on AI not just for information, but for companionship, validation, and mental and emotional support? What happens when we replace human interaction with AI interaction, which is already beginning to happen with ‘virtual partner’ chatbots like Replika and CarynAI? We can't predict exactly, but if history is any guide, it won't be all sunshine and rainbows.

They’re answering the wrong question

Here's the thing: AI is a tool. And like any tool, it's only as good or as bad as the person wielding it. We are the ones shaping AI, and we are the ones who will be shaped by it.

So, let's stop asking how AI will affect humanity, and start asking a far more important question: How will we choose to use AI to affect humanity?

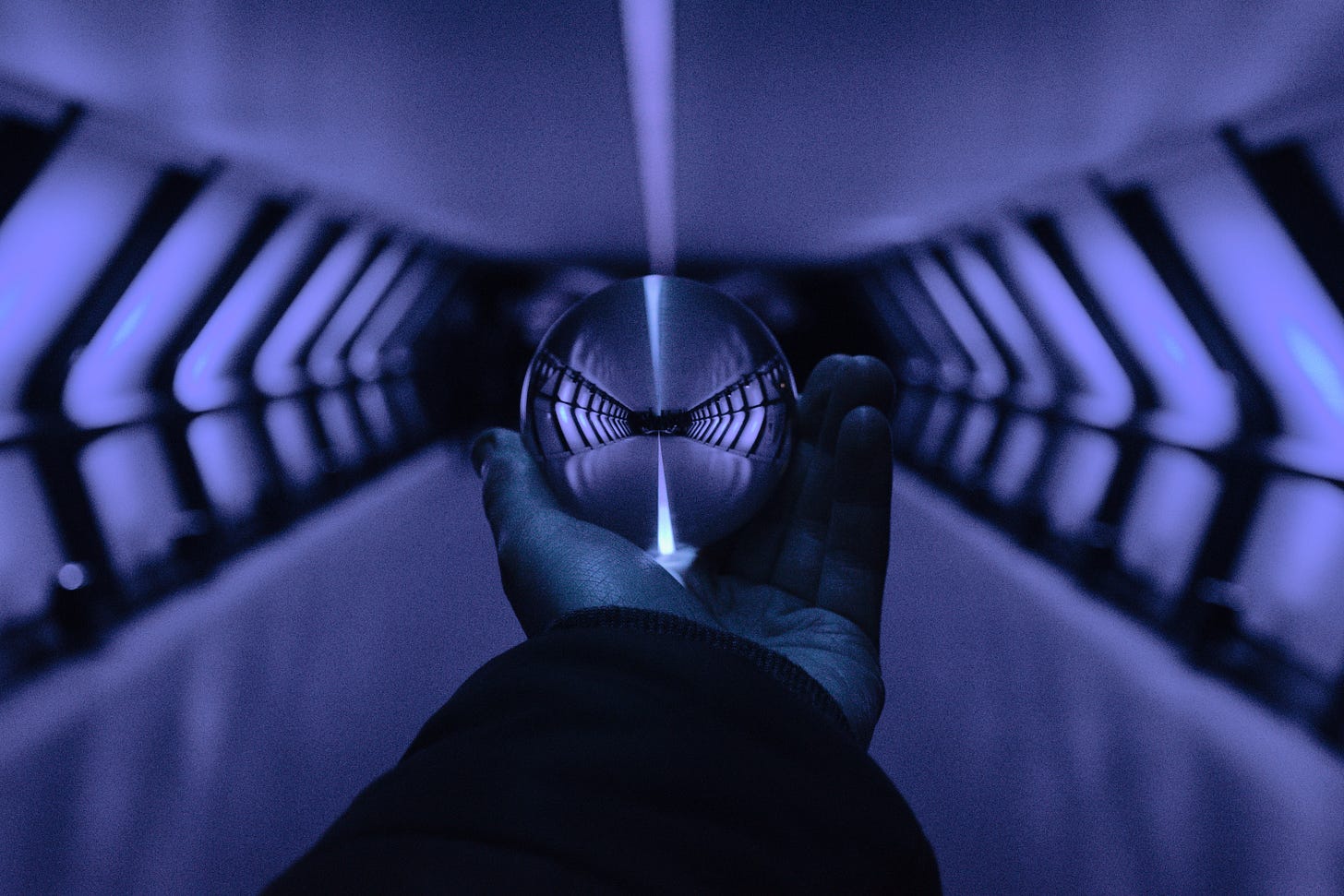

It's time to change the conversation about AI. Instead of asking CEOs to gaze into their crystal balls and predict the future, let's bring psychologists, neuroscientists, sociologists, ethicists, and other experts into the dialogue. Let's stop obsessing over sci-fi scenarios and start addressing the real and present dangers. Let's consider the unseen impacts of AI on our mental health and start taking preventative measures.

A call to arms for human behaviour experts

So, here's my rallying cry to all the psychologists, neuroscientists, and human behaviour experts out there:

It's high time to step up, to raise your voices and make them heard in this cacophonous arena of AI discussions.

You're the ones who understand the human mind. You're the ones who can help us comprehend the nuanced psychological and neurological impacts of AI. You're the ones who can guide us to harness the power of AI in a way that enhances our quality of life, rather than detracts from it.

Don't just sit on the sidelines while technologists and business tycoons dictate the narrative. Don't wait for the media to come knocking at your door. Take the initiative. Write articles, hold webinars, appear on podcasts, create YouTube videos — do whatever it takes to bring these crucial issues to the forefront of public awareness.

The world needs your insights, your knowledge, and your guidance. So don't wait for tomorrow. The time to act, to say something, to do something, is now.

With the unique perspective you offer, we can forge a future where AI serves as an instrument for enhancing human mental health and wellbeing, instead of undermining it. Because if we're going to navigate the choppy waters of the AI era successfully, we're going to need all hands on deck — and that includes you. The future of AI and humanity depends on it.

-Joel

Do you think we’re asking the wrong people about the future of AI? Who else do you believe should be part of the conversation? Leave your comments below or tweet me at @ProfJoelPearson to share your thoughts. And please share this with anyone you think could benefit from reading it!

I'd love to see you or other specialists in cognition get asked a bit more about this stuff in lieu of the CEOs and many of the critics. I find many of both the boosters and the critics to be deeply mistaken in how they think either the brain or the LLMs work. In particular, it seems a very common mistake on both sides to imagine that there are "occasional hallucinations" when in fact the whole thing is "hallucination", if you want to look at it that way. Some critics who do understand this then think that it invalidates the whole utility of the field, but that seems to me to be wrong on two important counts. For one, these things are producing outputs that are statistical predictions; as such, they're just as "right" or "wrong" as the mean of a set of numbers is. It could be useful, but you don't want to be, as the old joke has it, "the statistician who drowns crossing a river that averages 2 foot deep".

But by the same token, the idea that humans aren't ALSO just hallucinating all the time seems bizarre to me, especially as an aphantasic. You could look to how people imagine, for example, the racial mix of the Roman or Victorian age, to see how much they are just doing what the LLMs are doing, with the added conviction that comes from them having envisaged this thing that they actually have no direct knowledge of.

I'd really like to see someone provide a more nuanced take than the simplistic Pro- or Anti- positions that are prevalent.

I should add that some critics, like Prof Emily Bender, don't seem to make these mistakes, but neither are they pushing back against common assumptions of greater reliability in humans, AFAICT.

"So, here's my rallying cry to all the psychologists, neuroscientists, and human behaviour experts out there:" ?

Everyone should participate and speak their mind; AI affects everyone. Specialists, no matter which field they specialize in, have all been taught the same narratives. It's like writing a book and printing millions thereof.

The world has transformed into a woke culture and a world of entitlement, and many of these so-called specialists you mentioned have become part and parcel thereof.

Not because they want to, but because everyone else does, the collective mind or should I say the adaptation of a collective narrative.

As for AI, it can never become anything more than the narrative that it's being fed, all based on what its creators want it to advocate.

It's like a super-fast information retrieval machine, fact-checking to make sure it fits the narrative of its creators and spoon-feeding those that allow it to.

AI will follow, and already is following, the same trend as the media, only super fast and far more efficiently.

And whether AI leans to the left or right is determined by people such as Mark Zuckerberg, Bill Gates, George Soros and the likes of them.

After all, AI is all about the algorithms that are fed into it.

Blaming AI is like blaming a car for reckless driving instead of the driver or the programmer if it's automated.