Does AI Personalisation Make Us Nearsighted Or Farsighted?

Unpacking the paradoxical myopia of AIs.

Hello dear reader,

Imagine this: You’re exploring a new city and are looking for somewhere to eat. The thought of trawling through hundreds of Google reviews sounds like a massive headache that isn’t exactly conducive to holiday relaxation.

So instead, you enlist ChatGPT to whittle the list down to the top 30 options. Because this isn’t your first rodeo, you ask it for a diverse mix of cuisines, styles and budgets. “Surprise me!” you say.

You wind up with 10 Thai restaurants (your favourite), a medley of Middle Eastern and Mediterranean fare, some American-style dining and two options that aren’t even in that city (the cost of doing business, right?).

On the surface, that looks like a pretty solid cross-section, given the thousands of possible options in that city. But here’s what you’ve unknowingly missed out on:

That Sri Lankan joint with a line around the block (because you once told ChatGPT you don’t like coconut), an entire night food market and a once-in-a-lifetime Michelin-starred dining experience it decided you can’t afford.

This might seem like a pretty harmless scenario. After all, chances are you’ll stumble upon something great along the way anyway. But it points to a bigger issue with this technology: what innovation theorist John Nosta calls the “paradoxical myopia” of AI personalisation.

AI personalisation: An echo chamber or a house of mirrors?

In a recent article in Psychology Today, Nosta writes:

This "paradoxical myopia" encapsulates the irony wherein AI systems, designed to enhance cognitive engagement, inadvertently foster a form of short-sightedness. As AIs become more adept at predicting user preferences, they may overfit to individual tastes, reducing the likelihood of serendipitous discovery.

For all its pitfalls, there’s no doubt AI excels at personalisation. There’s a reason recommendation algorithms power everything from your Spotify Wrapped to your Uber Eats suggestions.

This technology gets to know you fast, and it tailors its recommendations and responses to what it thinks you want. And that’s kind of the point.

See, I’d argue that this myopia is paradoxical, but not in the way Nosta means.

Anyone with a decent level of technological literacy knows that the answers to their ChatGPT queries are going to be heavily skewed by bias. Sure, it might draw on a greater breadth of source material than Google, including social media, reviews, videos and podcasts.

But this just means more noise, not more nuance.

We don’t use AI because we truly want to synthesise a broad range of perspectives, including ones that clash with our own. We use it mostly because we want clear, ‘correct’ answers (i.e. those that confirm our own biases) without all the fluff.

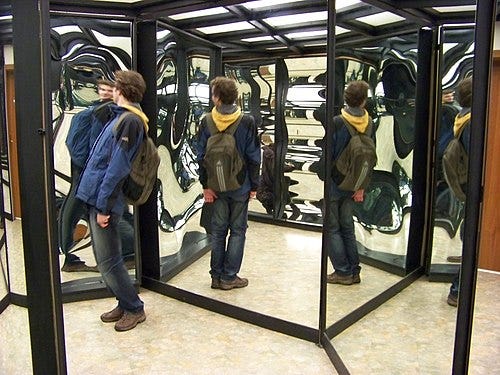

You’ll often hear this described as an ‘echo chamber’, where well-worn ideas bounce back at you over and over again (think social media feeds). But you could also think of it as a wacky house of mirrors, where reflections stretch and warp reality in unexpected ways.

Some mirrors make you taller, others compress you into a cartoonish distortion, and the further you try to see, the more exaggerated everything becomes. AI can create the illusion of objectivity or omniscience, but the image it presents is shaped by the angles and distortions of its training data.

AI’s myopia isn’t paradoxical because it goes against the goals of LLMs. It’s paradoxical because it’s also plagued by hyperopia, or farsightedness: it pulls from a vast, almost limitless pool of knowledge, but doesn’t always perceive depth correctly.

In other words, when we become overly reliant on AI, we can’t see the forest for the trees, or the trees for the forest.

It renders us both nearsighted (so we never find out about that incredible rooftop bar on the 40th floor above us) and farsighted (so we completely skip over the hole-in-the-wall restaurant that was right next to us the whole time).

How to foster greater cognitive diversity

So, how do we view the world in a more bifocal way, opening ourselves up to diverse perspectives from both near and far?

When it comes to AI, the key is to be very clear about what you want. Spell out parameters, goals and other details you’d never think to mention to a human because they seem obvious. For example: “Walking distance is important. I also appreciate surprises, so anything that’s really good but clashes with my usual preferences would be great.”

It might feel redundant, but the AI won’t know unless you tell it. Some AI tools, such as ChatGPT’s deep research feature, now ask follow-up clarifying questions. This really helps, as it prods you to add the extra details you may have left out because they seemed obvious.

I also like to add the larger context, or the forest. For the restaurant search, that might be something like: “This is an important meeting, so I want to impress the person I’m eating with through my choice of restaurant; cool, trendy areas might be relevant.” Or: “I want this to be a romantic dinner, so try to find a place that suits that.”

The other key is to interact. If you don’t like what you get the first time, add more information and experiment with different parameters. That kind of experimentation is the key to fine-tuning your working relationship with AI. In other words, you have to get to know it, just like you would a new employee!

Hope you find this useful!

Have a wonderful and productive week!

All the best,
Joel

Mental meanderings

Do you think AI personalisation makes us nearsighted, farsighted or a combination of both?

Does the confirmation bias present in LLMs deter you from using them?

How do you go about finding somewhere to eat when you’re in a new city?

Let us know in the comments below!